research

This page contains some research highlights organized by area. It was last updated in January 2020.

table of contents

machine translation | syntax, parsing, tagging | grammatical error correction | text classification | evaluation | datasets | teaching

In general, I like working on applied research problems and building things that people can use, but I am also occasionally distracted by an interesting problem even if it doesn’t have immediate practical use.

My complete list of publications can be found on my publications page.

Machine Translation

Tower of Babel images are popular in the MT community, but this story describes the profusion of languages, i.e., the problem. The story of Pentecost (Acts 2:1–13), wherein the people hear the apostles speaking “each…in our own language to which we were born?”, is a much better fit for the aims of the field, although perhaps a bit more sectarian (not to mention less popular as an artistic subject).

In my thesis work, I tried to integrate phrase-structure parser models as language models into a C++ ITG-based decoder originally written by Hao Zhang. The approach was to parse the source side with the ITG grammar and then intersect the target-side chart with a weighted grammar derived from the Penn Treebank, which served as a scoring mechanism.

Context-free Treebank-based grammars were well established as poor language models: they exacted a large cost in technical debt and runtime, and often did not help at all. Engineered to discriminate among legal structures over a sentence whose grammaticality was assumed, they tended to fare poorly on the language modeling task of distinguishing well-formed sentences within a sea of ungrammaticality. (A clue to this comes from sampling from such grammars, which produces pure garbage.) I wondered whether less leaky grammars might work better as language models, but I also wanted to keep the relatively low parsing complexity of CFGs. Much of my parsing work therefore looked into learning and using tree substitution grammars, which I hoped would work better as language models while maintaining low parsing complexity. The basic idea was that TSG derivations are “less” context-free, in the sense that larger fragments require fewer independent events. I wasn’t successful, however, and joined a long line of frustrating, failed attempts to improve machine translation with syntax. In the end, it turned out that moving to more complicated attribute-value grammars was the smarter move, as Phil Williams later showed.

I spent a number of years using and maintaining Joshua after taking over the project during my post-doc with Chris Callison-Burch. Until I stopped around 2016, we published many of the improvements at WMT each year, including one of my favorite-titled papers (credit to Juri Ganitkevitch):

In 2017 I spent a year working at Amazon. They had just written Sockeye when I arrived, and I was there for the official release of Amazon Translate in August of 2017. We wrote a paper describing the toolkit.

While there, I contributed a number of features, including source factors and lexically constrained decoding. Constrained decoding allows the user to specify words and phrases that must appear in the decoder output. It’s an important tool in many settings because it lets humans retain some control over the output. David Vilar and I extended nice prior work by Chris Hokamp and Qun Liu; our extension permitted decoding with a fixed-size beam by dynamically (at each time step) changing the allocation of the beam across different sets of hypotheses.
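The bank idea behind dynamic beam allocation can be sketched roughly as follows. This is a simplified, single-sentence illustration written for this page, not Sockeye’s implementation: the function name `allocate_beam`, the rule that leftover slots go to the most-constrained banks first, and the redistribution order are all assumptions. Candidates are grouped into banks by how many constraints they have met, each bank gets a roughly equal share of the fixed beam, and slots a bank cannot fill are handed to other banks, so the total beam size never changes.

```python
from typing import List


def allocate_beam(beam_size: int, num_constraints: int, available: List[int]) -> List[int]:
    """Split a fixed beam across banks of hypotheses (illustrative sketch).

    Bank i holds candidates that have met exactly i of the constraints;
    available[i] is how many candidates bank i could supply at this time step.
    """
    num_banks = num_constraints + 1
    if len(available) != num_banks:
        raise ValueError("need one availability count per bank")

    # Even split; any remainder goes to the most-constrained banks (an assumption).
    sizes = [beam_size // num_banks] * num_banks
    for i in range(beam_size % num_banks):
        sizes[num_banks - 1 - i] += 1

    # A bank cannot fill more slots than it has candidates.
    sizes = [min(s, a) for s, a in zip(sizes, available)]

    # Redistribute unused slots one at a time, most-constrained bank first.
    unused = beam_size - sum(sizes)
    while unused > 0:
        gave_slot = False
        for i in reversed(range(num_banks)):
            if unused == 0:
                break
            if sizes[i] < available[i]:
                sizes[i] += 1
                unused -= 1
                gave_slot = True
        if not gave_slot:
            break  # fewer candidates overall than beam slots
    return sizes


print(allocate_beam(6, 2, [10, 0, 1]))  # [5, 0, 1]
```

In the example, the beam has six slots and there are two constraints. Only one candidate has met both constraints and none has met exactly one, so the slots those banks cannot use flow back to the unconstrained bank.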

After returning to JHU in the fall of 2018, I worked with Edward Hu to improve the batch decoding component by vectorizing the dynamic beam allocation. Vectorizing not only improved allocation and search, but also turned up a number of corner cases that were not handled in my original implementation (constrained decoding is quite tricky).

Constrained decoding is a form of paraphrasing: what you’re essentially doing is asking the decoder to produce a paraphrase of what beam search would have found, subject to the constraints you’ve provided. I’ve therefore also been interested in paraphrasing lately. One difficulty with treating paraphrasing as a neural MT task is the lack of training data. Ben Van Durme, Edward, and I used various heuristically derived positive and negative constraints to generate pseudo-bitext by backtranslating from Czech–English training data. The resulting English output can be paired with the original English data to create bitext for training an NMT model. This led to the Parabank project:

Another challenge is generating diverse paraphrases. Parabank2 contains a more diverse set of paraphrases created by sampling and clustering back-translations; each original English sentence is paired with five paraphrases.

Syntax, parsing, and tagging

I must have read Sharon Goldwater’s Cognition article applying the Dirichlet process model to word segmentation ten times in graduate school. That familiarity let me apply the model to a related segmentation task: learning tree substitution grammar (TSG) fragments from Treebank-style trees.

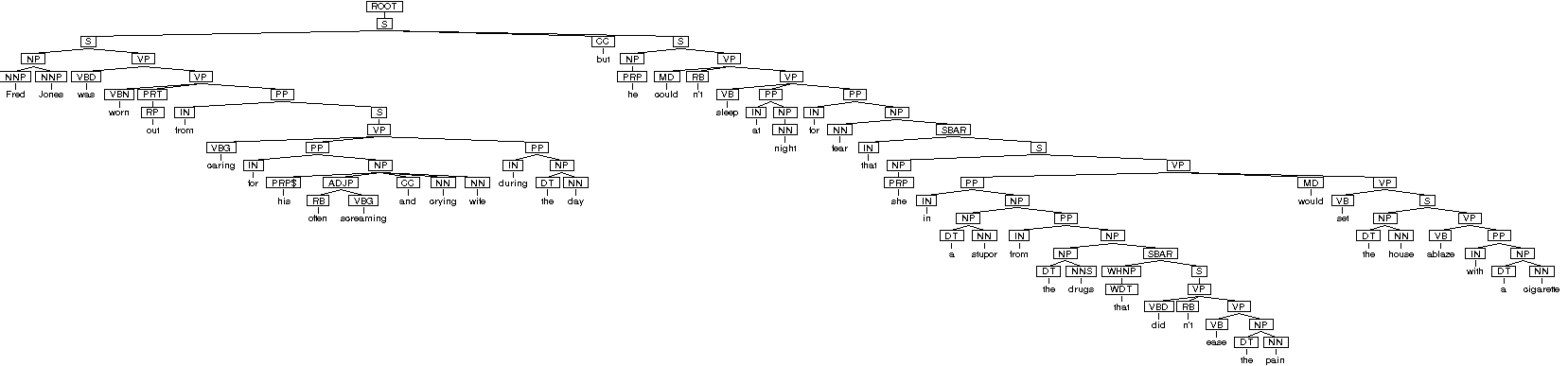

My goal in learning these grammars was to build better language models for machine translation, as part of my thesis. I hoped that using larger fragments might help loosen the independence assumptions that were possibly at the root of the failure of CFG-based parsing models to provide syntactic guidance in language modeling. TSG fragments learned in this way capture natural syntactic constructions (such as the lexicalized predicate-argument structure of a verb). Although parsing with TSGs is still context-free, the hope was that they could work as better language models since a tree could be derived with fewer independent decisions.
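Under a Dirichlet process prior, the probability of using a fragment depends on how often it has already been used. Below is a minimal sketch of the standard Chinese-restaurant-process predictive probability, (n_e + α·P0(e)) / (n + α), for fragments sharing a root nonterminal. The function name, argument names, and fragment strings are hypothetical. The base-measure probability `base_prob` is taken as given rather than computed from a base grammar.

```python
from collections import Counter


def dp_predictive(fragment: str, counts: Counter, alpha: float, base_prob: float) -> float:
    """P(fragment | fragments already used with the same root), CRP view (sketch).

    counts:    how often each fragment with this root nonterminal has been used
    alpha:     DP concentration parameter
    base_prob: P0(fragment), the base-measure probability (assumed given here)
    """
    n_fragment = counts[fragment]  # Counter returns 0 for unseen fragments
    n_total = sum(counts.values())
    return (n_fragment + alpha * base_prob) / (n_total + alpha)


# Made-up fragment strings, purely for illustration.
counts = Counter({"(VP (VBD said) S)": 3, "(VP VBD NP)": 1})
print(dp_predictive("(VP (VBD said) S)", counts, alpha=1.0, base_prob=0.5))
```

Here the frequently reused fragment gets (3 + 0.5) / (4 + 1) = 0.7, while an unseen fragment with the same base probability would get 0.5 / 5 = 0.1. Reuse is rewarded, and α·P0 reserves some probability mass for new fragments.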

The following workshop paper provided a clue that they could be better language models:

But I never succeeded in actually improving MT with them.

Incidentally, binarized TSGs caused some problems for chart parsing, since the intermediate states introduced by binarization have zero cost and thus can’t be effectively compared. We applied the idea of weight pushing from deterministic FSAs and showed that it could both speed up and improve chart parsing.
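Below is a minimal sketch of the idea, built on assumptions made for illustration:

- Rules for a single left-hand side are left-binarized, so that rules sharing a prefix share virtual nonterminals, much like a trie or a deterministic FSA.
- Costs are negative log probabilities.
- Each virtual nonterminal is charged the lowest cost of any complete rule that passes through it.

Every complete rule keeps its total cost, but intermediate items now carry an estimate of their eventual cost instead of zero. The function name and data layout are hypothetical, and this is not presented as the exact procedure from the paper.

```python
from typing import Dict, Tuple

Rhs = Tuple[str, ...]


def push_weights(rules: Dict[Rhs, float]) -> Tuple[Dict[Rhs, float], Dict[Rhs, float]]:
    """Left-binarize the rules of one LHS and push costs onto virtual nonterminals.

    rules maps a right-hand side (length >= 2) to its cost.
    Returns (virtual_costs, top_costs):
      virtual_costs[p] = cost of the binary rule that builds virtual nonterminal <p>
      top_costs[r]     = cost of the final binary rule X -> <r[:-1]> r[-1]
    """
    def potential(prefix: Rhs) -> float:
        # Cheapest complete rule passing through <prefix>; a lone real symbol gets 0.
        if len(prefix) < 2:
            return 0.0
        return min(c for r, c in rules.items()
                   if len(r) > len(prefix) and r[:len(prefix)] == prefix)

    virtual_costs: Dict[Rhs, float] = {}
    top_costs: Dict[Rhs, float] = {}
    for rhs, cost in rules.items():
        # Each proper prefix of length >= 2 is a virtual nonterminal in the binarization.
        for k in range(2, len(rhs)):
            prefix = rhs[:k]
            virtual_costs[prefix] = potential(prefix) - potential(prefix[:-1])
        # The top rule pays whatever cost has not already been pushed below it.
        top_costs[rhs] = cost - potential(rhs[:-1])
    return virtual_costs, top_costs


virtual, top = push_weights({("A", "B", "C"): 3.0, ("A", "B", "D"): 5.0, ("A", "E"): 1.0})
print(virtual)  # {('A', 'B'): 3.0}
print(top)      # {('A', 'B', 'C'): 0.0, ('A', 'B', 'D'): 2.0, ('A', 'E'): 1.0}
```

Because the potentials telescope along each binarized path, the costs along each path still sum to the original rule cost. The shared item <A B> is no longer free, so it can be compared against competing items in the chart.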

Text classification

A fun paper was work I did with Shane Bergsma on stylometric analysis of papers in the ACL Anthology Network. We tried to predict author attributes such as native language from scientific papers, expecting the rigid style conventions of scientific writing to make the task more difficult. We didn’t have gold-standard data, so we used two sets of automatic criteria to create “gilt” data: one very strict set and one looser set. One question was whether syntactic features, including fragments from a tree substitution grammar, could help predict these attributes.

(This paper contains an easter egg in Figure 1. Try zooming in 29,000%!)

We also showed that explicit fragments from TSGs could be used just as effectively as the implicit fragment comparisons computed by tree kernels, which had been shown to be effective at many text classification tasks. Our approach was simpler and faster.

Grammatical Error Correction

Fragments from a TSG help with judging grammaticality:

We conducted a ground-truth evaluation of metrics using techniques from WMT, showing that many metrics developed for WMT were not any good! Another group (Roman Grundkiewicz, Marcin Junczys-Dowmunt, and Edward Gillian) had the same idea.

One observation from sentence-level GEC is that it is often unclear how to correct a sentence. In a TACL paper, we suggest that there is a spectrum ranging from minimal corrections that ensure functional grammaticality to fluency corrections that reword a sentence so that it sounds more natural to native speakers.

Keisuke Sakaguchi, my student (co-advised with Ben Van Durme), applied reinforcement learning to train an MT-based GEC system directly against GEC metrics.

He also wrote a very nice paper that makes minimal corrections by framing the task as dependency parsing.

Evaluation

Efficient collection of annotations in a model-based framework:

I took over running the human evaluation for WMT starting in 2012. There is a real question about how to produce gold-standard system rankings from judgments, and over the years many changes were introduced, often in a somewhat ad hoc fashion (e.g., A Grain of Salt for the WMT Manual Evaluation (Bojar et al., 2011)). One of my favorite papers in this line of work was Models of Translation Competitions (Hopkins & May, 2013), which argued that the different models for producing this ranking should themselves be subject to empirical evaluation, and proposed perplexity as the measure. This inspired work with my colleague Ben Van Durme and former student Keisuke Sakaguchi to apply the TrueSkill algorithm (used to produce competitive matchups on Xbox) to the WMT evaluation. Instead of perplexity, we evaluated the models on predicting the actual human pairwise judgments (which we argued was more direct and relevant), and showed that TrueSkill did better on that task than both the earlier “expected wins” method and the Hopkins & May model:

However, as with fancier models in many situations, the actual system rankings (which aggregate pairwise judgments) produced by the three models don’t change much.
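For comparison with TrueSkill, the basic “expected wins” idea fits in a few lines. This is an illustrative simplification written for this page, not WMT’s official scoring scripts. It assumes ties have already been discarded. It also averages each system’s head-to-head win rate only over the opponents it was actually compared against, which is an assumption of this sketch. The function name and input format are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def expected_wins(judgments: List[Tuple[str, str]]) -> Dict[str, float]:
    """Score systems from (winner, loser) pairs; ties are assumed to be dropped already."""
    wins: Dict[Tuple[str, str], int] = defaultdict(int)
    systems = set()
    for winner, loser in judgments:
        wins[(winner, loser)] += 1
        systems.update((winner, loser))

    scores = {}
    for i in systems:
        rates = []
        for j in systems:
            if i == j:
                continue
            n = wins[(i, j)] + wins[(j, i)]
            if n > 0:
                # Fraction of head-to-head comparisons that system i won against j.
                rates.append(wins[(i, j)] / n)
        # Average over opponents actually compared against (a simplifying assumption).
        scores[i] = sum(rates) / len(rates) if rates else 0.0
    return scores


judgments = [("A", "B"), ("A", "B"), ("B", "A"), ("A", "C"), ("C", "B")]
print(sorted(expected_wins(judgments).items(), key=lambda kv: -kv[1]))
```

With these made-up judgments, A wins 2/3 of its comparisons with B and all of its comparisons with C, for a score of 5/6. C scores 1/2 and B scores 1/6. TrueSkill instead maintains a Gaussian skill estimate per system that is updated after each comparison.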

While at Amazon, I released SacreBLEU, a tool whose name pleases me to no end.¹ SacreBLEU scratched a number of itches. It actually began as a tool to simply download and extract the WMT test sets, since I was tired of doing this in different settings, and in particular of dealing with their (malformed) XML format. But its role grew during our efforts to benchmark Sockeye against published papers, when we realized how much BLEU scores varied under different tokenizations. Others had made similar observations: see Rico Sennrich’s Twitter complaint about the difficulty of comparing scores, and Kenneth Heafield’s stern, spicy warning contributed to the default Moses script. My “call for clarity” paper quantified this problem and provided an easily installable tool that produced scores that could be compared across papers.

(It was rejected from ACL 2018, in part, I think, because I was an area chair and my paper was therefore sent to a “catch-all” area. I’m still grumpy about that, but it doesn’t really matter.)

I also had a lot of fun creating the poster for this paper. I drew it freehand using Procreate on an iPad Pro. You can also see an accessible version of the poster that I created for a submission to the 2021 ICLR workshop on Rethinking ML Papers.
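The tokenization problem behind SacreBLEU shows up even in a toy example. The sketch below is not SacreBLEU’s code. It computes only clipped unigram precision, one ingredient of BLEU, for the same hypothesis and reference under two hypothetical tokenizers: one splits on whitespace, and the other also splits off punctuation.

```python
import re
from collections import Counter
from typing import Callable, List


def whitespace_tokenize(text: str) -> List[str]:
    return text.split()


def punct_tokenize(text: str) -> List[str]:
    # Separate runs of word characters from individual punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text)


def clipped_unigram_precision(hyp: str, ref: str, tokenize: Callable[[str], List[str]]) -> float:
    """Fraction of hypothesis tokens matched in the reference, with counts clipped as in BLEU."""
    hyp_counts = Counter(tokenize(hyp))
    ref_counts = Counter(tokenize(ref))
    matches = sum(min(count, ref_counts[tok]) for tok, count in hyp_counts.items())
    return matches / max(1, sum(hyp_counts.values()))


hyp, ref = "Hello, world!", "Hello world!"
print(clipped_unigram_precision(hyp, ref, whitespace_tokenize))  # 0.5
print(clipped_unigram_precision(hyp, ref, punct_tokenize))       # 0.75
```

The same output scores 0.5 under whitespace splitting and 0.75 once punctuation is split off. Scores are therefore only comparable across papers when the tokenization (and the rest of the metric configuration) is fixed and reported.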

Datasets

MTurk demographics:

Indian languages dataset: (The translations were collected in the annotators’ second languages and are therefore of very low quality, so we do not recommend using this dataset.)

We produced a four-way parallel dataset, translating transcripts and also providing ASR output. This collaborative effort turned out to be quite forward-looking: in recent years, the dataset has proven useful for work on end-to-end speech-to-text translation, in part because of the paucity of similar datasets. The idea was CCB’s (Chris Callison-Burch); Adam collected all the translations and created the repository, I ran the translation experiments and (I think) did most of the writing, and Gaurav produced the Kaldi lattices. A nice thing about the code repository is that a single script takes your existing LDC-licensed audio data and builds the parallel data you need. I think this is a good model for sharing data.

Teaching

I taught a machine translation course at JHU with Adam Lopez (2012 and 2014) and Chris Callison-Burch (2012). For the class, we developed a set of five assignments: alignment, decoding, evaluation, reranking, and inflection. We wrote this up for a 2013 TACL paper:

Philipp Koehn arrived at JHU around 2015 and took over teaching of the MT class, so I haven’t had much involvement with it since then.

-

¹ Prior to release, I was worried that the name would be too offensive. I checked with a few French acquaintances and was assured that it wasn’t, since the phrase is pretty antiquated.